Overview

Compound Eye is building a vision system for machines. Autonomous vehicles and other robots need to understand the world in 3D to interact effectively with their surroundings. While today’s robots often rely on cameras and active sensors like lidar and radar, these systems struggle in unstructured environments like homes, sidewalks, and city streets.

In contrast, humans and animals—using only vision—navigate complex spaces with ease. Compound Eye simulates nature’s depth perception capabilities to enable robots to do the same, using RGB cameras and advanced software.

I worked as a Product Designer to support the company’s mission of enabling depth perception through vision-only systems. My focus was on designing interfaces for 3D data annotation, depth editing, and analysis tools used by internal teams and external testers.

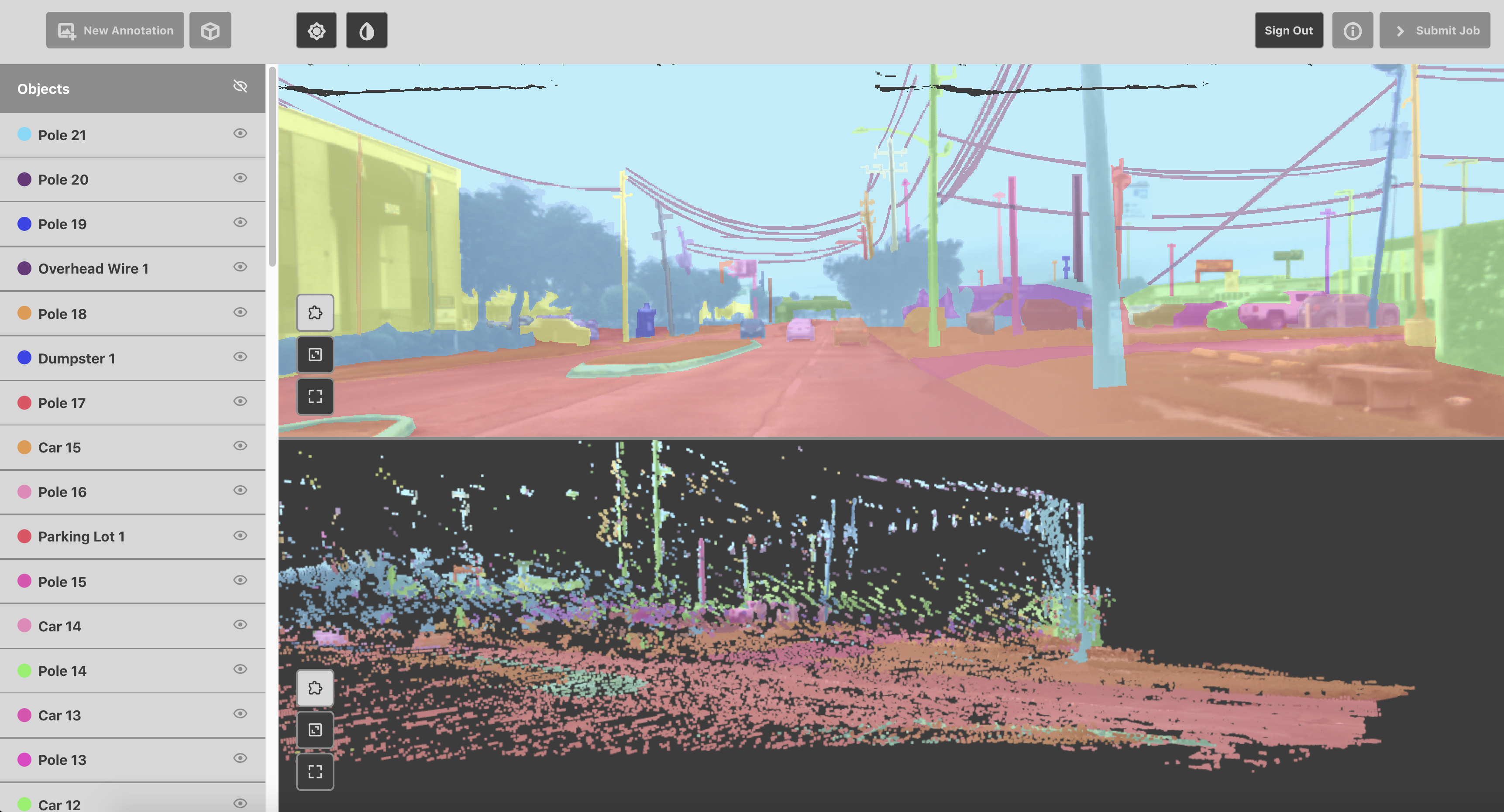

After evaluating over 200 commercial annotation tools, the team realized most were designed for sparse 3D datasets and didn’t meet the needs of their high-resolution stereo system. Instead of adapting existing tools, they chose to build their own.

However, with a small internal team and limited bandwidth, they needed efficient tools that would reduce annotation time and increase accuracy—without diverting engineering resources from core development.

“Compound Eye tried to outsource the annotation work to other vendors but, due to poor quality, high costs, and restrictive tooling, decided not to do so.”— CloudFactory Case Study

VIDAS Devkit - Developed UI components for the VIDAS perception platform, including:

• Point cloud/depth map editing tools

• Cuboid creation and annotation workflows

• Live preview UI for wireless camera capture

• Ground truth comparison via Inspector tool

User Goals:

• Fast annotation with minimal training

• Real-time editing and feedback

• Scalable workflow for both on-road and off-road data

Tech Behind the Vision

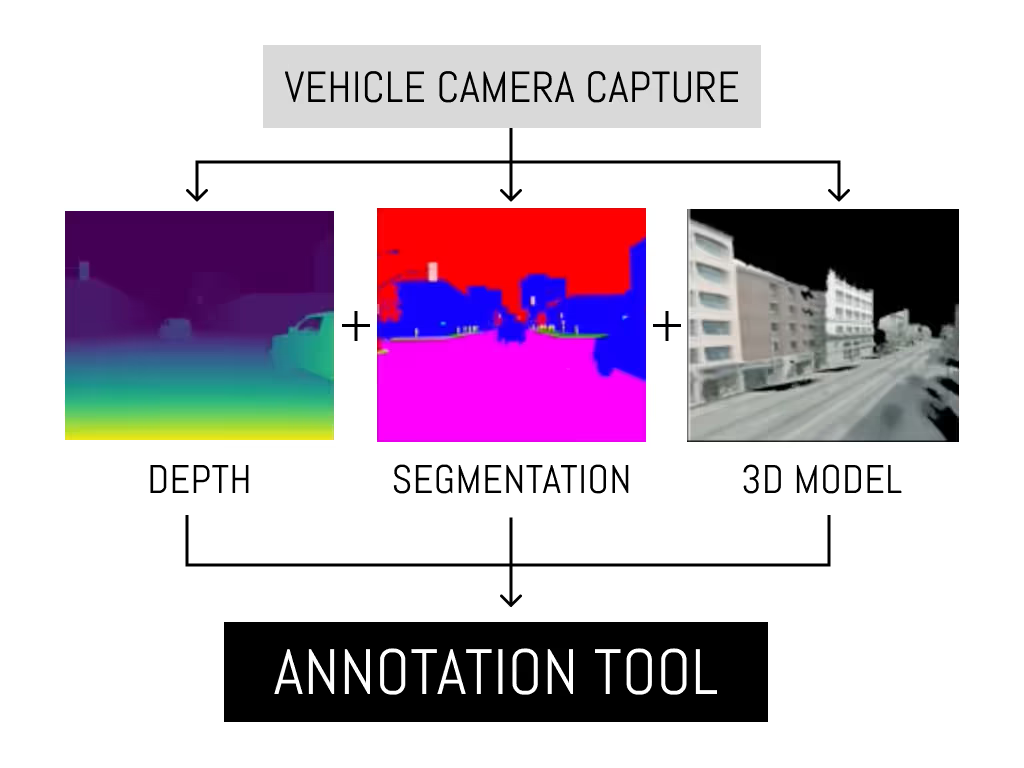

Compound Eye’s system fuses parallax and semantic cues to compute real-time depth at every pixel: they point two or more regular cameras at a scene, determine distance using both parallax and semantic cues, and fuse the results to get accurate depth at every pixel—all in real time.

- Dense Depth + Per-Pixel Semantics + Optical Flow

- Operates in real-world, unstructured environments

- Embedded, low-latency, vehicle-agnostic system

- Developer access via the VIDAS SDK

Impact

The custom-built annotation tools supported the rapid development of Compound Eye’s 3D perception models, allowing internal teams to generate training data efficiently and accurately—without relying on external vendors.

Semantic Segmentation UI

3D Data Annotation

"After researching more than 200 commercially available annotation tools, the team found that most were built for sparse 3D datasets. Instead of buying off the shelf, they decided to build a tool to power their state-of-the-art perception platform. But even with this valuable resource, the company’s small team was still constrained by in-house capacity. And they didn’t want to spend time on tedious annotation tasks; they wanted to focus on the company’s mission of building a full 3-D perception solution using cameras. Compound Eye tried to outsource the annotation work to other vendors but, due to poor quality, high costs, and restrictive tooling, decided not to do so." - CloudFactory Case study